Why Can’t I Hold All These RSS Feeds

I’ve mentioned my woes with my RSS reader off and on in posts here, and I almost had another disaster. Thankfully, I learned my lesson last time, because I ended up breaking my FreshRSS install. I came across this post on Hacker News, where someone had asked for people to post their personal blogs. Someone had set up an OPML feed for this list and stuck it on GitHub. I thought to myself, “Why not, I like these types of people, surely there are some good things in here”.

So I hooked the OPML up to my FreshRSS. This tripled how many feeds I was subscribed to. It also broke my reader. I don’t know exactly what happened, but it stopped updating feeds and would not even load the main page. I did some investigation and found that one of the SQL tables had become corrupted. THANKFULLY it was not the one with the feeds themselves. Literally everything else can be rebuilt easily if needed, but recovering the feed list is paramount. I immediately created an export dump of the feed list. After some troubleshooting, I completely deleted the FreshRSS database, reloaded a months-old backup, and then reimported the recent feed list tables.

The only thing missing was a handful of categories I had added since the last backup. I created some dummy categories, “Category 32”, “Category 33”, that sort of thing. Because feeds point at their category by ID, the feeds automatically fell into these dummy categories, which allowed me to figure out what each actual category name was. For example, one has some comic and book feeds in it, so clearly, this was originally my “Books and Comics” category.
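The dummy category trick can be sketched with a small in-memory SQLite database. This is only an illustration of the idea, not the real FreshRSS schema: the `feed` and `category` tables, the `category_id` column, and the sample feed names are all made up for the example.

```python
import sqlite3


def build_demo():
    """Create a toy database where some feeds point at missing categories.

    The table and column names are hypothetical, not FreshRSS's own.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE category (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute(
        "CREATE TABLE feed (id INTEGER PRIMARY KEY, name TEXT, category_id INTEGER)"
    )
    # Category 1 survived in the old backup; 32 and 33 were added later and lost.
    conn.execute("INSERT INTO category (id, name) VALUES (1, 'Music')")
    conn.executemany(
        "INSERT INTO feed (name, category_id) VALUES (?, ?)",
        [
            ("Some Music Blog", 1),
            ("A Webcomic", 32),
            ("A Book Review Site", 32),
            ("A LEGO Blog", 33),
        ],
    )
    conn.commit()
    return conn


def find_missing_category_ids(conn):
    """Return category ids that feeds reference but that have no category row."""
    rows = conn.execute(
        """
        SELECT DISTINCT f.category_id
        FROM feed AS f
        LEFT JOIN category AS c ON c.id = f.category_id
        WHERE c.id IS NULL
        ORDER BY f.category_id
        """
    ).fetchall()
    return [row[0] for row in rows]


def create_placeholders(conn, missing_ids):
    """Insert a dummy 'Category N' row for every missing id."""
    for cat_id in missing_ids:
        conn.execute(
            "INSERT INTO category (id, name) VALUES (?, ?)",
            (cat_id, f"Category {cat_id}"),
        )
    conn.commit()


def feeds_by_category(conn):
    """Group feed names under their category name."""
    rows = conn.execute(
        """
        SELECT c.name, f.name
        FROM category AS c
        JOIN feed AS f ON f.category_id = c.id
        ORDER BY c.id, f.name
        """
    ).fetchall()
    grouped = {}
    for category_name, feed_name in rows:
        grouped.setdefault(category_name, []).append(feed_name)
    return grouped


if __name__ == "__main__":
    conn = build_demo()
    create_placeholders(conn, find_missing_category_ids(conn))
    for category_name, feeds in feeds_by_category(conn).items():
        print(category_name, feeds)
```

Once the placeholder rows exist, looking at which feeds landed under each “Category N” is usually enough to guess the original name and rename it.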

Eventually, I’ll weed some of these feeds out. There are some in languages I don’t understand. It’s nothing personal, but I have plenty to read without hassling with translations. Some feeds tend to post TOO MUCH and dominate the RSS reader. I’m pretty relentless about chopping these, and Hacker News is pretty much the only flooder that I allow to remain. Techmeme and Slashdot are sometimes borderline but not usually, so they get to stay as well.

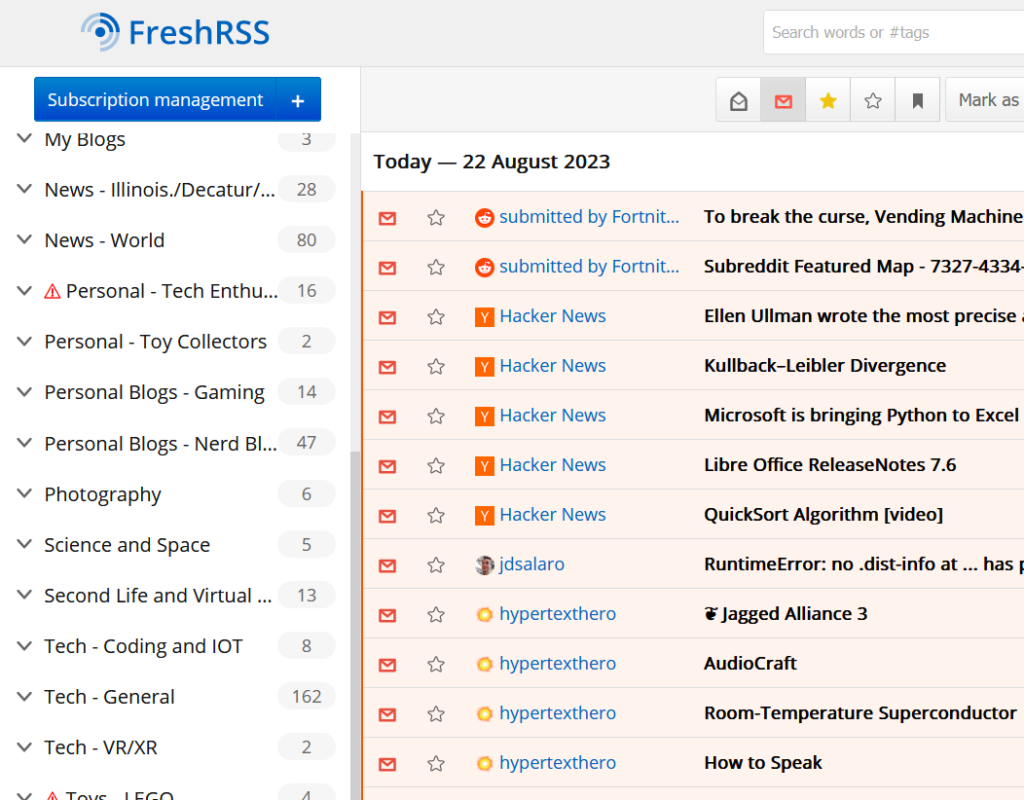

Everything is sorted into categories, and I usually read through in category chunks. And no, I don’t read everything. I skim for interesting headlines or updates from my favorites and read those. I can’t find a good number for how many feeds I have, but I think it’s just over 1200 now, sorted out across categories. Currently, I use the following categories.

- Anime/Japan

- Books and Comics

- Food

- Friend’s Blogs

- Games – Deal and Bundles

- Games – Tabletop

- Games – VG News

- Games – VG Reviews

- Language Learning

- Lifestyle and Family

- Movies/TV

- Music

- My Blogs

- News – Conservative Bull Shit (Currently all Muted)

- News – Illinois/Decatur/Local

- News – Liberal Opinions

- News – US News (Empty, they all end up in World)

- News – World

- PersBlogs – Tech Enthusiasts

- PersBlogs – Toy Collectors

- PersBlogs – Gaming

- PersBlogs – Nerd Blogs

- Photography

- Science/Space

- Second Life and Virtual Worlds

- Tech – Coding and IT

- Tech – Crypto Bullshit

- Tech – General

- Tech – Security

- Tech – VR/XR

- Toys – Transformers

- Toys – LEGO

- Toys – News

- Uncategorized

- Webcomics

- Writing and Writers

This is essentially the gamut of my interests. Sometimes, if a category becomes too unwieldy, I’ll break some of the feeds out into a more refined category, which is where the prefixes come from (News, Tech, PersBlogs, Toys).
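For the feed count, one option is to count from an OPML export instead of hunting for a number in the reader itself. The sketch below assumes the export nests feed outlines (the ones with an `xmlUrl` attribute) inside category outlines, which is a common OPML layout. The sample data and the function name are made up for illustration.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# A tiny hand-written OPML sample; a real export would be read from a file.
SAMPLE_OPML = """<opml version="2.0">
  <head><title>Sample subscriptions</title></head>
  <body>
    <outline text="Books and Comics">
      <outline type="rss" text="A Webcomic" xmlUrl="https://example.com/comic.xml"/>
      <outline type="rss" text="A Book Blog" xmlUrl="https://example.com/books.xml"/>
    </outline>
    <outline type="rss" text="Loose Feed" xmlUrl="https://example.com/loose.xml"/>
  </body>
</opml>"""


def count_feeds_by_category(opml_text):
    """Count feed outlines per category in an OPML document."""
    root = ET.fromstring(opml_text)
    counts = Counter()
    body = root.find("body")
    if body is None:
        return counts

    def walk(node, category):
        for outline in node.findall("outline"):
            if outline.get("xmlUrl"):
                # An outline with xmlUrl is an actual feed.
                counts[category] += 1
            else:
                # Anything else is treated as a category folder.
                name = outline.get("text") or outline.get("title") or "Unnamed"
                walk(outline, name)

    # Feeds sitting directly under <body> have no category folder.
    walk(body, "Uncategorized")
    return counts


if __name__ == "__main__":
    counts = count_feeds_by_category(SAMPLE_OPML)
    for category, total in sorted(counts.items()):
        print(f"{category}: {total}")
    print("Total feeds:", sum(counts.values()))
```

To run it against a real export, read the file contents into a string and pass that to `count_feeds_by_category` in place of `SAMPLE_OPML`.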

As I mentioned before, I mostly read in the category view. Anything I find interesting I’ll tag with either the BI or Lameazoid tag, and then my news digest script goes to work, I think at 11 PM each night. I don’t check it every day, which is fine, and some days I check it 2-3 times, often while eating breakfast and sometimes again in the evening.

Josh Miller aka “Ramen Junkie”. I write about my various hobbies here. Mostly coding, photography, and music. Sometimes I just write about life in general. I also post sometimes about toy collecting and video games at Lameazoid.com.