Code Project – Goodreads RSS to HTML (Python)

I already have my Letterboxd watches set up to syndicate here, to this blog, mostly for archival purposes. When I log a movie in Letterboxd, a plug-in called “RSS Importer” catches it from the RSS feed and makes a post. They aren’t the prettiest posts, and I may look into adjusting the formatting with some CSS, but they are there. I really want to do the same for my Goodreads reading. Goodreads lists all have an RSS feed, so reason would have it that I could simply put that feed into RSS Importer and have the same syndication happen.

For some reason, it throws out an error.

The feed shows as valid and even gives me a preview post, but for whatever reason, it won’t create the actual posts. This is probably actually ok, since the Goodreads RSS feed is weird and ugly. I’ll get more into that in a bit.

I decided that I could simply do it myself, with Python. One thing Python is excellent for is data retrieval and manipulation, and I’m already doing something similar with my FreshRSS Syndication posts. I want to run through a bit of the process I used for creating this script, partly because it might help people who are trying to learn programming understand a bit more about how creating a program, at least a simple one, actually works.

There were some basic maintenance tasks to take care of first. Firstly, I made sure I had a category on my WordPress site to accept the posts into. I had this already because I needed it while trying to get RSS Importer to work. Secondly, I created a new project in PyCharm. Visual Studio Code works as well, as would any number of IDEs; I just prefer PyCharm for Python. In my main.py file, I also added a commented-out block at the top with URLs cut and pasted from Goodreads. I also verified these feeds actually worked with an RSS reader.

For the actual code, the process needs basically three steps:

- Retrieve the RSS feed

- Process the RSS Feed

- Post the processed data.

Part three is essentially already done. I can easily lift the code from my FreshRSS poster, replace the actual post data payload, and let it go. I can’t process data at all without data to process, so step one is to get the RSS data. I could probably work it out from my FreshRSS script as well, but instead I decided to just refresh my memory by searching for “Python Get RSS Feed”, which brings up one of the two core points I want to make in this post.

Programming is not about knowing all the code.

Programming is more often about knowing what process needs to be done, and knowing where and how to use the code needed. I don’t remember the exact libraries and syntax to get an RSS feed and feed it through Beautiful Soup. I know that I need to get an RSS feed, and I know I need Beautiful Soup.

My search returned this link, which I cribbed some code from, modifying the variables as needed. I basically skimmed through to just before “Outputting to a file”. I don’t need to output to a file, I can just do some print statements during debugging and then later it will all output to WordPress through a constructed string.

I did several runs along the way, finding that I needed to use lxml instead of xml in the features on the Beautiful Soup Call. I also opted to put the feed URL in a variable instead of directly in the code as the original post had it. It’s easy to swap out. I also did some testing by simply printing the output of “books” to make sure I was actually getting useful data, which I was.

At this point, my code looks something like this (not exactly, but something like it):

    import requests
    from bs4 import BeautifulSoup

    feedurl = "Goodreads URL HERE"


    def goodreads_rss(feedurl):
        book_list = []
        try:
            r = requests.get(feedurl)
            soup = BeautifulSoup(r.content, features='lxml')
            books = soup.find_all('item')
            for a in books:
                title = a.find('title').text
                # With the lxml parser, <link> shows up as an empty tag,
                # so this value may come back blank.
                link = a.find('link').text
                # The lxml parser lowercases tag names, so pubDate becomes pubdate.
                published = a.find('pubdate').text
                book = {
                    'title': title,
                    'link': link,
                    'published': published
                }
                book_list.append(book)
            return book_list
        except Exception as e:
            print('The scraping job failed. See exception: ')
            print(e)


    print('Starting scraping')
    print(goodreads_rss(feedurl))
    print('Finished scraping')

I was getting good data, and so Step 1 (above) was done. The real meat here is processing the data. As I mentioned before, Goodreads gives a really ugly RSS feed. It has several tags for data in it, but they aren’t actually used for some reason. Here is a sample of what a single book looks like:

    <item>
      <guid></guid>
      <pubdate></pubdate>
      <title></title>
      <link/>
      <book_id>5907</book_id>
      <book_image_url></book_image_url>
      <book_small_image_url></book_small_image_url>
      <book_medium_image_url></book_medium_image_url>
      <book_large_image_url></book_large_image_url>
      <book_description>Written for J.R.R. Tolkien’s own children, The Hobbit met with instant critical acclaim when it was first published in 1937. Now recognized as a timeless classic, this introduction to the hobbit Bilbo Baggins, the wizard Gandalf, Gollum, and the spectacular world of Middle-earth recounts of the adventures of a reluctant hero, a powerful and dangerous ring, and the cruel dragon Smaug the Magnificent. The text in this 372-page paperback edition is based on that first published in Great Britain by Collins Modern Classics (1998), and includes a note on the text by Douglas A. Anderson (2001).]]></book_description>
      <book id="5907">
        <num_pages>366</num_pages>
      </book>
      <author_name>J.R.R. Tolkien</author_name>
      <isbn></isbn>
      <user_name>Josh</user_name>
      <user_rating>4</user_rating>
      <user_read_at></user_read_at>
      <user_date_added></user_date_added>
      <user_date_created></user_date_created>
      <user_shelves>2008-reads</user_shelves>
      <user_review></user_review>
      <average_rating>4.28</average_rating>
      <book_published>1937</book_published>
      <description>
        <img alt="The Hobbit (The Lord of the Rings, #0)" src="https://i.gr-assets.com/images/S/compressed.photo.goodreads.com/books/1546071216l/5907._SY75_.jpg"/><br/>
        author: J.R.R. Tolkien<br/>
        name: Josh<br/>
        average rating: 4.28<br/>
        book published: 1937<br/>
        rating: 4<br/>
        read at: <br/>
        date added: 2011/02/22<br/>
        shelves: 2008-reads<br/>
        review: <br/><br/>
        ]]>
      </description>
    </item>

Half the data isn’t within the useful tags; instead, it’s just down below the image tag inside the description. Not all of it, though. It’s ugly and weird. The other thing that REALLY sticks out, if you skim through it, is that there is NO usable “title”: the title tag is there, but it comes back empty. The book title isn’t (quite) even in the feed. Instead, it just has a Book ID, which is a number that, presumably, relates to something on Goodreads.

In the above code, there is a line “for a in books”, which starts a loop and builds an array of book objects. This is where all the data I’ll need later will go, for each book, in a format similar to what is shown in “title = a.find(‘title’).text”. First I pulled out the easy ones that I might want later when constructing the actual post.

- num_pages

- book_description

- author_name

- user_rating

- isbn (Not every book has one, but some do)

- book_published

- img

Lastly, I also pulled out the “description” and set to work parsing it out. It’s just a big string, and it’s regularly formatted across all books, so I split it on the br tags. This gave me a list with each line as an entry in the list. I counted out the index for each list element and then split them again on “: “, assigning the value at index [1] (the second value) to various variables.
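As a rough illustration of that parsing step, here is a minimal sketch that turns each “key: value” line into a dictionary entry instead of counting indexes, so a missing or reordered line doesn’t shift everything. It is not the code my script uses: the helper name `parse_description_lines` is hypothetical, and the sample string is just modeled on the description block shown above.

```python
def parse_description_lines(text):
    """Turn 'key: value' lines into a dict (hypothetical helper)."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # partition() splits on the first ':' only, so a value that
        # itself contains ':' stays in one piece.
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


# Sample text modeled on the description block in the feed sample.
sample = """
author: J.R.R. Tolkien
name: Josh
average rating: 4.28
book published: 1937
rating: 4
read at:
date added: 2011/02/22
shelves: 2008-reads
review:
"""

fields = parse_description_lines(sample)
print(fields["book published"])
print(fields["date added"])
```

Looking values up by name costs a few more lines than indexing, but an empty field such as “read at” simply becomes an empty string instead of raising an IndexError.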

The end result is an array of book objects with usable data that I can later build into a string that will be delivered to WordPress as a post. The code at this point looks like this:

    import requests
    from bs4 import BeautifulSoup

    url = "GOODREADS URL"

    book_list = []


    def goodreads_rss(feed_url):
        try:
            r = requests.get(feed_url)
            soup = BeautifulSoup(r.content, features='lxml')
            books = soup.find_all('item')
            for a in books:
                # Debug print of the raw item
                print(a)
                # Break the description text into one entry per line
                book_blob = a.find('description').text.split('<br/>')
                book_data = book_blob[0].split('\n ')
                author = a.find('author_name').text
                isbn = a.find('isbn').text
                desc = a.find('book_description').text
                image = str(a.find('img'))
                # The image alt text doubles as the book title
                title = str(image).split('"')[1]
                article = {
                    'author': author,
                    'isbn': isbn,
                    'desc': desc,
                    'title': title,
                    'image': image,
                    'published': book_data[4].split(": ")[1],
                    'my_rating': book_data[5].split(": ")[1],
                    'date_read': book_data[7].split(": ")[1],
                    'my_review': book_data[9].split(": ")[1],
                    # Uncomment for debugging
                    #'payload': book_data,
                }
                book_list.append(article)
        except Exception as e:
            print('The scraping job failed. See exception: ')
            print(e)
        # Return whatever was parsed, even after a failure, so the loop below still works
        return book_list


    print('Starting scraping')
    for_feed = goodreads_rss(url)
    for each in for_feed:
        print(each)

And a sample of the output looks something like this (3 books):

{'author': 'George Orwell', 'isbn': '', 'desc': ' When Animal Farm was first published, Stalinist Russia was seen as its target. Today it is devastatingly clear that wherever and whenever freedom is attacked, under whatever banner, the cutting clarity and savage comedy of George Orwell’s masterpiece have a meaning and message still ferociously fresh.]]>', 'title': 'Animal Farm', 'image': '<img alt="Animal Farm" src="https://i.gr-assets.com/images/S/compressed.photo.goodreads.com/books/1424037542l/7613._SY75_.jpg"/>', 'published': '1945', 'my_rating': '4', 'date_read': '2011/02/22', 'my_review': ''}

{'author': 'Philip Pullman', 'isbn': '0679879242', 'desc': "Can one small girl make a difference in such great and terrible endeavors? This is Lyra: a savage, a schemer, a liar, and as fierce and true a champion as Roger or Asriel could want--but what Lyra doesn't know is that to help one of them will be to betray the other.]]>", 'title': 'The Golden Compass (His Dark Materials, #1)', 'image': '<img alt="The Golden Compass (His Dark Materials, #1)" src="https://i.gr-assets.com/images/S/compressed.photo.goodreads.com/books/1505766203l/119322._SX50_.jpg"/>', 'published': '1995', 'my_rating': '4', 'date_read': '2011/02/22', 'my_review': ''}

{'author': 'J.R.R. Tolkien', 'isbn': '', 'desc': 'Written for J.R.R. Tolkien’s own children, The Hobbit met with instant critical acclaim when it was first published in 1937. Now recognized as a timeless classic, this introduction to the hobbit Bilbo Baggins, the wizard Gandalf, Gollum, and the spectacular world of Middle-earth recounts of the adventures of a reluctant hero, a powerful and dangerous ring, and the cruel dragon Smaug the Magnificent. The text in this 372-page paperback edition is based on that first published in Great Britain by Collins Modern Classics (1998), and includes a note on the text by Douglas A. Anderson (2001).]]>', 'title': 'The Hobbit (The Lord of the Rings, #0)', 'image': '<img alt="The Hobbit (The Lord of the Rings, #0)" src="https://i.gr-assets.com/images/S/compressed.photo.goodreads.com/books/1546071216l/5907._SY75_.jpg"/>', 'published': '1937', 'my_rating': '4', 'date_read': '2011/02/22', 'my_review': ''}

I still would like to get the title, which isn’t an entry of its own, but each image uses the book title as its alt text. I can use the previously pulled-out “image” string to get this. The image result is a complete HTML image tag and link. It’s regularly structured, so I can split it on the double quotes, then take the second entry (the title) and assign it to a variable. I should not have to worry about titles with quotes being an issue, since the way Goodreads is sending the payload, these quotes should already be removed or dealt with in some way, or the image tag itself wouldn’t work.
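For comparison, here is a hedged alternative to splitting the tag string on quotes: reading the alt attribute through an HTML parser. This sketch uses only Python’s built-in `html.parser` so it runs without extra installs. The `AltTextParser` class is a hypothetical helper of my own naming, and the second sample tag is made up to show an escaped quote. With Beautiful Soup, `a.find('img').get('alt')` should give the same result.

```python
from html.parser import HTMLParser


class AltTextParser(HTMLParser):
    """Collect the alt text of every <img> tag (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.alts = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; entities such as
        # &quot; are already decoded in the values.
        if tag == "img":
            self.alts.append(dict(attrs).get("alt", ""))


def alt_text(img_html):
    """Return the alt text of the first <img> in the string, or ''."""
    parser = AltTextParser()
    parser.feed(img_html)
    return parser.alts[0] if parser.alts else ""


# Tag copied from the sample output above.
hobbit = ('<img alt="The Hobbit (The Lord of the Rings, #0)" '
          'src="https://i.gr-assets.com/images/S/compressed.photo.goodreads.com/'
          'books/1546071216l/5907._SY75_.jpg"/>')
print(alt_text(hobbit))

# Made-up tag showing how an escaped quote in a title is handled.
quoted = '<img alt="&quot;Quoted&quot; Title" src="cover.jpg"/>'
print(alt_text(quoted))
```

The parser route is a little longer, but it does not depend on the alt attribute being the first quoted value in the tag.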

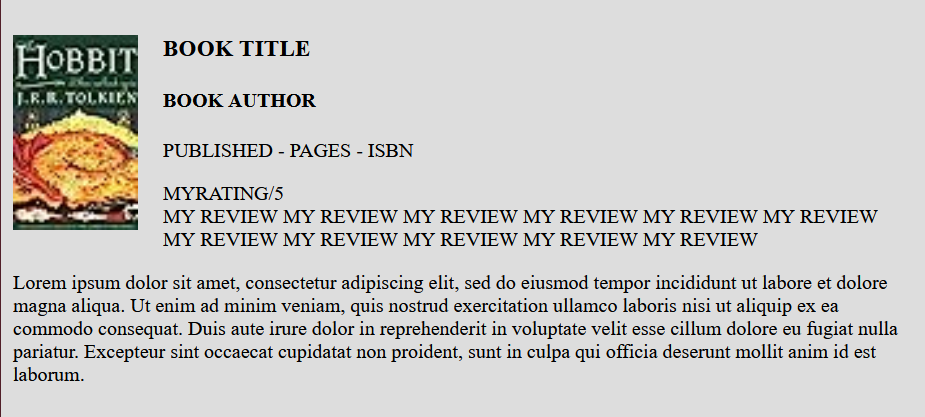

    title = str(image).split('"')[1]

I’m not going to go super deep into the formatting process, for conciseness, but it’s not really that hard, and the code will appear in my final code chunk. Basically, I want the entries to look like little cards, with a thumbnail image and most of the data I pulled into my array formatted out. I’ll mock up something in basic HTML independently, then use that to build the structure of my post string. It will look something like this when finished, with the variables stuck in place at the relevant points, so the code will loop through and insert all the values:

    post_array = []

    for each in for_feed:
        post = f'<div class="book-card"> <div> <div class="book-image">' \
               f'{each["image"]}' \
               f'</div> <div class="book-info"> <h3 class="book-title">' \
               f'{each["title"]}' \
               f'</h3> <h4 class="book-author">' \
               f'{each["author"]}' \
               f'</h4> <p class="book-details">' \
               f'Published: {each["published"]} | Pages:{each["pages"]}' \
               f'</p> <p class="book-review">'
        # Skip the rating line when the rating is "0"
        if each["my_rating"] != "0":
            post += f'My Rating: {each["my_rating"]}/5<br>'
        # Close the review paragraph before closing the info divs
        post += f'{each["my_review"]}' \
                f'</p> </div> </div> <div class="book-description"> <p class="book-summary">' \
                f'Description: {each["desc"]}' \
                f'</p> </div> </div>'
        print(post)
        post_array.append(post)

I don’t use all of the classes added, but I did add custom classes to everything so I don’t have to go back and modify my code later if I want to add more formatting. I did make a bit of simple CSS that can be added to the WordPress custom CSS (or any CSS actually, if you just wanted to stick this in a webpage) to make some simple cards. They should center in whatever container they get stuck inside; in my case, it’s the WordPress column.

    .book-card {
        background-color: #DDDDDD;
        width: 90%;
        margin: 20px auto;
        padding: 10px;
        border: solid 1px;
        min-height: 200px;
    }

    .book-image {
        float: left;
        margin-bottom: 10px;
        margin-right: 20px;
        width: 100px;
    }

    .book-image img {
        width: 100%;
        object-fit: cover;
    }

    .book-info {
        margin: 10px;
    }

The end result looks something like this. Unfortunately, the images in the feed are tiny, but that’s ok, it doesn’t need to be huge.

Something I noticed along the way: I had initially been using the “all books” RSS feed, which meant it was giving me all the books on my profile, not JUST read books. I switched the RSS feed to “read” and things still worked, but “read” only returns a maximum of 200 books. Fortunately, I use shelves based on year for my books, so I can go through each shelf and pull out ALL the books I have read over the years.
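Going shelf by shelf could look roughly like the sketch below. Everything here is an assumption for illustration: `collect_shelves` is a hypothetical helper, the URLs are placeholders, the “2009-reads” shelf and which book sits on it are made-up sample data, and `fake_fetch` stands in for the real feed reader so the example runs offline.

```python
def collect_shelves(shelf_urls, fetch):
    """Merge books from several shelf feeds, skipping repeats.

    shelf_urls: dict mapping a shelf name to its feed URL.
    fetch: any function that takes a feed URL and returns a list of book dicts.
    """
    seen = set()
    merged = []
    for shelf, feed_url in shelf_urls.items():
        for book in fetch(feed_url):
            key = (book["title"], book["author"])
            if key in seen:
                continue  # same book filed on more than one shelf
            seen.add(key)
            # Copy the book and remember which shelf it came from.
            merged.append({**book, "shelf": shelf})
    return merged


# Placeholder URLs; the real ones would be copied from each Goodreads shelf.
shelves = {
    "2008-reads": "URL FOR 2008 SHELF",
    "2009-reads": "URL FOR 2009 SHELF",
}

# Made-up stand-in data so the sketch runs without network access.
FAKE_FEEDS = {
    "URL FOR 2008 SHELF": [
        {"title": "The Hobbit (The Lord of the Rings, #0)", "author": "J.R.R. Tolkien"},
    ],
    "URL FOR 2009 SHELF": [
        {"title": "Animal Farm", "author": "George Orwell"},
        {"title": "The Hobbit (The Lord of the Rings, #0)", "author": "J.R.R. Tolkien"},
    ],
}


def fake_fetch(feed_url):
    return FAKE_FEEDS.get(feed_url, [])


all_books = collect_shelves(shelves, fake_fetch)
for book in all_books:
    print(book["shelf"], "-", book["title"])
```

One caveat if the real `goodreads_rss` function is passed in as `fetch`: as written in my script it appends to a module-level `book_list`, so it would need to start from a fresh list on each call to avoid returning earlier shelves again.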

Which leads me to a bit of a split in the process.

At some point, I’ll want to run this code on a schedule somewhere and have it check for newly read books (probably based on date), then post those as they are read.

But I also want to pull and post ALL the old reads, by date. These two paths will MOSTLY use the same code. For the new books, I’ll attach it to the “read” list, have it check the feed, then compare the date added in the latest entry, entry [0], to the current date. If it’s new, say, within 24 hours, it’ll post the book as a new post.
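The “is it new?” check could be sketched like this. It assumes the date arrives in the `YYYY/MM/DD` form seen in the sample output (for example `2011/02/22`); the function name `is_recent` and the 24-hour default are just illustrative choices.

```python
from datetime import datetime, timedelta


def is_recent(date_added, now=None, window=timedelta(hours=24)):
    """Return True when a 'YYYY/MM/DD' date falls within the window before now."""
    now = now or datetime.now()
    # The feed only gives a date, so this parses as midnight of that day.
    added = datetime.strptime(date_added, "%Y/%m/%d")
    # Reject dates in the future as well as dates older than the window.
    return timedelta(0) <= now - added <= window


# Fixed "now" values so the results are repeatable.
print(is_recent("2011/02/22", now=datetime(2011, 2, 22, 18, 0)))
print(is_recent("2011/02/22", now=datetime(2011, 2, 24, 9, 0)))
```

Because the feed has no time of day, a 24-hour window measured from midnight could miss a book added late in the day, so a scheduled version would probably want a wider window plus a record of what has already been posted.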

Change of plans. Rather than make individual posts, I’m going to just generate a pile of HTML code, and make backdated posts for each previous year. Much simpler and cleaner. I can then run the code once a year and make a new post on December 31st. Goodreads already serves the basic purpose of “book tracking”, I mostly just want an archive version. It’s also cleaner looking in the blog and means I don’t need to run the script all the time or have it even make the posts itself.

For the archive, I’ll pull all entries for each of my yearly shelves, then make a post for all of them, replacing the “published date” on each with the “date added” date. Because I want the entries on my Blog to match the (approximate) finished date.

I think, we’ll see.

I’ve decided to just strike out these changes of plans. After making the post, I noticed the date added is not the date read. I know the yearly shelves are accurate, but the date added is when I added it, probably from some other notes at a later date. Unfortunately, the RSS feed doesn’t have any sort of entry for “Date Read” even though it’s a field you can set as a user, so I just removed it. It’s probably for the best, since Goodreads only allows one “Date Read,” so any books I’ve read twice will not be accurate anyway.

This whole new plan of yearly digests also means in the end I can skip step 3 above. I’m not making the script make the posts; I can cut and paste and make them manually. This lets me double-check things. One little thing I found: there was an artifact of some brackets at the end of the description. I just added a string slice to chop it off.
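The slice in my final script, `[:-3]`, removes the last three characters no matter what they are. A slightly more careful sketch, assuming the artifact is always the `]]>` seen in the sample output, only trims when the marker is really there. The function name `strip_cdata_tail` is hypothetical.

```python
def strip_cdata_tail(text, marker="]]>"):
    """Remove a trailing marker only when the text actually ends with it."""
    text = text.rstrip()
    if text.endswith(marker):
        text = text[:-len(marker)]
    return text


print(strip_cdata_tail("A timeless classic.]]>"))
print(strip_cdata_tail("No marker on this one."))
```

With a plain `[:-3]`, the second string would lose the end of its last word, which is the case this check guards against.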

I guess it’s a good idea to at some point mention the second of the two points I wanted to make here, about reusing code. Programming is all about reusing code. Your own code, someone else’s code, it doesn’t matter, code is code. There are only so many ways to do the same thing in code, they are all going to look generically the same. I picked out bits from that linked article and made them work for what I was doing, I’ll pick bits from my FreshRSS poster code, and clean it up as needed to work here. I’ll reuse 90% of the code here, to make two nearly identical scripts, one to run on a schedule, and one to be run several times manually. This also feeds back into point one, knowing what code you need and how to use it. Find the code you need, massage it together into one new block of code, and debug out the kinks. Wash, rinse, repeat.

The output is located here, under the Goodreads category.

Here is the finished complete script:

    import requests
    from bs4 import BeautifulSoup

    url = "GOODREADS URL HERE"

    book_list = []


    def goodreads_rss(feed_url):
        try:
            r = requests.get(feed_url)
            soup = BeautifulSoup(r.content, features='lxml')
            books = soup.find_all('item')
            for a in books:
                # print(a)
                # Break the description text into one entry per line
                book_blob = a.find('description').text.split('<br/>')
                book_data = book_blob[0].split('\n ')
                author = a.find('author_name').text
                isbn = a.find('isbn').text
                pages = a.find('num_pages').text
                # Chop the trailing bracket artifact off the description
                desc = a.find('book_description').text[:-3]
                image = str(a.find('img'))
                # The image alt text doubles as the book title
                title = str(image).split('"')[1]
                article = {
                    'author': author,
                    'isbn': isbn,
                    'desc': desc,
                    'title': title,
                    'image': image,
                    'pages': pages,
                    'published': book_data[4].split(": ")[1],
                    'my_rating': book_data[5].split(": ")[1],
                    'date_read': book_data[7].split(": ")[1],
                    'my_review': book_data[9].split(": ")[1],
                    # Uncomment for debugging
                    #'payload': book_data,
                }
                book_list.append(article)
        except Exception as e:
            print('The scraping job failed. See exception: ')
            print(e)
        # Return whatever was parsed, even after a failure, so the loop below still works
        return book_list


    print('Starting scraping')
    for_feed = goodreads_rss(url)

    post_array = []

    for each in for_feed:
        post = f'<div class="book-card"> <div> <div class="book-image">' \
               f'{each["image"]}' \
               f'</div> <div class="book-info"> <h3 class="book-title">' \
               f'{each["title"]}' \
               f'</h3> <h4 class="book-author">' \
               f'{each["author"]}' \
               f'</h4> <p class="book-details">' \
               f'Published: {each["published"]} | Pages:{each["pages"]}' \
               f'</p> <p class="book-review">'
        # Skip the rating line when the rating is "0"
        if each["my_rating"] != "0":
            post += f'My Rating: {each["my_rating"]}/5<br>'
        # Close the review paragraph before closing the info divs
        post += f'{each["my_review"]}' \
                f'</p> </div> </div> <div class="book-description"> <p class="book-summary">' \
                f'Description: {each["desc"]}' \
                f'</p> </div> </div>'
        print(post)
        post_array.append(post)

Josh Miller aka “Ramen Junkie”. I write about my various hobbies here. Mostly coding, photography, and music. Sometimes I just write about life in general. I also post sometimes about toy collecting and video games at Lameazoid.com.