100 Days of Python, Projects 51-53 #100DaysofCode

Here we are now with a few more automated bot tasks. It’s been a fun series of lessons, though I enjoyed using Beautiful Soup more than Selenium. Selenium runs into too many anti-bot measures on the web to be truly effective. I mean, it’s definitely a useful tool, but in my experience, it’s not reliable enough. BS seems to be much more effective, though it can’t really interact with pages.

In the long run, I think I am mostly just irritated by the “clever bullshit” on web pages that makes both pieces of software a pain to work with. Take Instagram: none of the classes or ids are anything but jumbled characters. The code feels like it was written by a machine, and it probably was.

Also, this round is a bit shorter than before because the course is veering off into a new direction with Flask Apps, so it seemed appropriate to wrap things up on the Automation Section of the Projects.

Day 51 – Twitter Speed Complainer

This project is great, because this is something I have tried to run from other people’s code but it never seems to actually work. Now, I just have my own code to run.

EZ Mode.

It will need something with a desktop to run it on, but I have a whole Windows PC for running random shit and a mess of Raspberry Pis. I don’t even care about the complaining part, in fact, I would rather not, I just want to track Internet speed. I may even change this to push to a spreadsheet or database or something later.

But for now, it Tweets.

So, the Speed test part was easy, though I used SpeedOf.me instead of SpeedTest.net, because SpeedTest.net supposedly will give dodgy numbers by partnering with ISPs and putting servers in ISP data centers. I just prefer SpeedOf.me mostly, it’s cleaner.

The Twitter part was tricky… ish… So, a common problem I keep running into with Selenium is that websites think my Bot Programs are Bots.

I’m so offended for my Bots, accusing them of being Bots. They run into captchas and email verifications and just flat out fail to log in or load half the time. It makes sense, captchas and email verifying exist 100% to stop people from abusing things like Selenium. Fortunately, Twitter Bots are one thing I do have a fair amount of experience with. I wrote one ages ago that just tweeted the uptime of the server. I wrote one script that would pull lines from a text file and tweet them out at an interval. I have another Python based bot that tweets images. What do these Bots do differently? They are 100% Bots, running with the proper Twitter Bot API, and labeled as such.

So, since Selenium was being a pain to deal with using Twitter, I pulled out my Image Posting Bot code and scavenged out the pieces I needed, which was about 4 or 5 lines of code. It uses a Python Library called Tweepy. In order to use Tweepy, you have to use the Twitter Developer console to get API Keys, which I already had.
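For a rough idea of how little code the API route takes, here is a minimal sketch. It assumes Tweepy 4.x and its OAuth 1.0a user handler; the function names, the message wording, and the placeholder key strings are made up for this example, and the actual bot code may look different.

```python
def build_tweet(down_mbps, up_mbps):
    """Format a speed test result as tweet text (wording invented for this example)."""
    return f"Current internet speed: {down_mbps:.1f} Mbps down / {up_mbps:.1f} Mbps up"


def post_tweet(text, consumer_key, consumer_secret, access_token, access_token_secret):
    """Post through the Twitter API with Tweepy instead of driving a browser."""
    # Imported here so build_tweet() still works on a machine without Tweepy.
    import tweepy

    auth = tweepy.OAuth1UserHandler(
        consumer_key, consumer_secret, access_token, access_token_secret
    )
    api = tweepy.API(auth)
    api.update_status(status=text)


message = build_tweet(93.42, 11.08)
print(message)

# With real keys from the Twitter Developer console (placeholders shown):
# post_tweet(message, "CONSUMER_KEY", "CONSUMER_SECRET", "ACCESS_TOKEN", "ACCESS_SECRET")
```

The speed numbers would come from the Selenium speed test step; only the posting goes through the API, so there is no login page or captcha involved.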

Day 52 – Instagram Follower

Another almost useful project. For this project, you open up Instagram and log in, then it opens an account of your choosing and follows anyone following that account.

Now, while I have a love/hate relationship with Instagram, I am not super interested in cluttering up my feed with thousands of accounts. So, while I did complete the task, I set it up to ONLY follow the first 10 accounts. I also added a check to make sure I wasn’t already following said account.
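The limit and the “already following” check boil down to a small filter. This is only a sketch of the idea with plain data instead of Selenium elements: the function name is hypothetical, and checking the button label for the text “Follow” is an assumption about how the check could be done, not necessarily how Instagram’s page or the course solution works. It also reads “first 10” as “stop after 10 new follows”.

```python
def pick_accounts_to_follow(followers, limit=10):
    """Return up to `limit` account names that are not already followed.

    `followers` is a list of (account_name, button_label) pairs.
    """
    picked = []
    for name, label in followers:
        if label != "Follow":  # e.g. "Following" or "Requested", so skip it
            continue
        picked.append(name)
        if len(picked) >= limit:
            break
    return picked


# Example data: every third account is already followed.
followers = [
    (f"user{i}", "Following" if i % 3 == 0 else "Follow") for i in range(20)
]
to_follow = pick_accounts_to_follow(followers)
print(to_follow)
```

In the real script, each picked entry would be a button element that gets clicked rather than a name in a list.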

I may revisit this again later with other, more useful ways to interact with IG. Maybe instead of following random people from another account, it auto follows back. Or maybe it goes through “suggested” and looks for keywords in a person’s profile and follows them.

Day 53 – Zillow Data Aggregator Capstone

The final project for this section combines Selenium and Beautiful Soup to aggregate real estate listings from Zillow into a Google Spreadsheet doc. I quite liked this one actually, it’s straightforward and relatively harmless. I did run into an issue where it started thinking I was a Bot, but by that point, I knew I could successfully scrape what I needed from Zillow, so I commented out the Zillow call and replaced it with a file load using an HTML file snapshot of the Zillow page.

This was very easy to slide in as a fix because I was already pulling the source code using Selenium into a variable, then passing that variable to Beautiful Soup. It was simply a matter of passing the file read instead.
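The swap can be sketched like this. The helper name, its parameters, and the snapshot file name are all hypothetical; the point is just that the parser only ever sees a string of HTML, so it doesn’t care where the string came from.

```python
from pathlib import Path


def get_page_source(snapshot_path=None, fetch_live=None):
    """Return page HTML from a saved snapshot if given, otherwise from a live fetch."""
    if snapshot_path is not None:
        return Path(snapshot_path).read_text(encoding="utf-8")
    if fetch_live is None:
        raise ValueError("need either a snapshot path or a live fetch function")
    return fetch_live()


# Live version (assumes an existing Selenium driver):
#   html = get_page_source(fetch_live=lambda: driver.page_source)
# Snapshot version (file name is a placeholder):
#   html = get_page_source(snapshot_path="zillow_snapshot.html")
# Either way the string goes to Beautiful Soup unchanged:
#   soup = BeautifulSoup(html, "html.parser")
```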

Scraping the data itself was a bit tricky, Zillow seems to do some funny dynamic loading so my number of listings and addresses and prices didn’t always match. To solve this, I added a line that just uses whichever value is the smallest. They seem to capture in order, but eventually, some fell off, so if I got 8 prices and 10 addresses, I just took the first 8 of each.
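As a small illustration of the “use the smallest” fix, here is a sketch with made-up data. The helper name is hypothetical, and it only works because the values come back in matching order, as described above.

```python
def trim_to_shortest(*columns):
    """Cut every list down to the length of the shortest one."""
    shortest = min(len(col) for col in columns)
    return [col[:shortest] for col in columns]


# Made-up scrape results: 10 addresses but only 8 prices.
addresses = [f"{n} Example St" for n in range(1, 11)]
prices = [f"${1000 + n * 50}" for n in range(1, 9)]

addresses, prices = trim_to_shortest(addresses, prices)
print(len(addresses), len(prices))
```

Python’s built-in zip() stops at the shortest list too, so looping with `for address, price in zip(addresses, prices):` would have the same effect.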

Another issue I came across: the URLs for each listing don’t always have a full URL. Sometimes you have to add “https://www.zillow.com” to the front. It wasn’t a hard fix:

    if "zillow" not in link:
        link = "https://www.zillow.com" + link

There was also an issue with the links because each link shows up twice using the scrape I was using. A quick search gave a clever solution to remove duplicates. It’s essentially: list = list(dictionary built from the list). A Dictionary can’t have duplicate keys, so the duplicates get discarded when the list is converted to a dictionary, and then that result just gets flattened back out into a list.
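In Python that trick is a one-liner. The link values below are made up for the example.

```python
# Each link shows up twice in the scrape, in order.
links = [
    "/homedetails/1",
    "/homedetails/1",
    "/homedetails/2",
    "/homedetails/2",
]

# dict.fromkeys() keeps one key per unique link, list() turns the keys back into a list.
unique_links = list(dict.fromkeys(links))
print(unique_links)
```

Dictionaries keep insertion order in Python 3.7 and later, so the links stay in the same order as the listings, which a set() would not guarantee.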

Lastly was the form entry itself. The Data Entry uses a method I’ve used before for entering data to Google remotely, with Google Forms. Essentially, Selenium fills out and submits the form over and over for each result. I had a bit of an issue here because the input boxes use funny tags and are hard to target directly. Then my XPaths were not working properly. I fixed this by adding two things. First, I had Selenium open the browser maximized, to make sure everything loaded. Second, I added more sleep() delays here and there, to make sure things loaded all the way.

One thing I have found working with Selenium, you can never have too many sleep()s. The web can be a slow place.

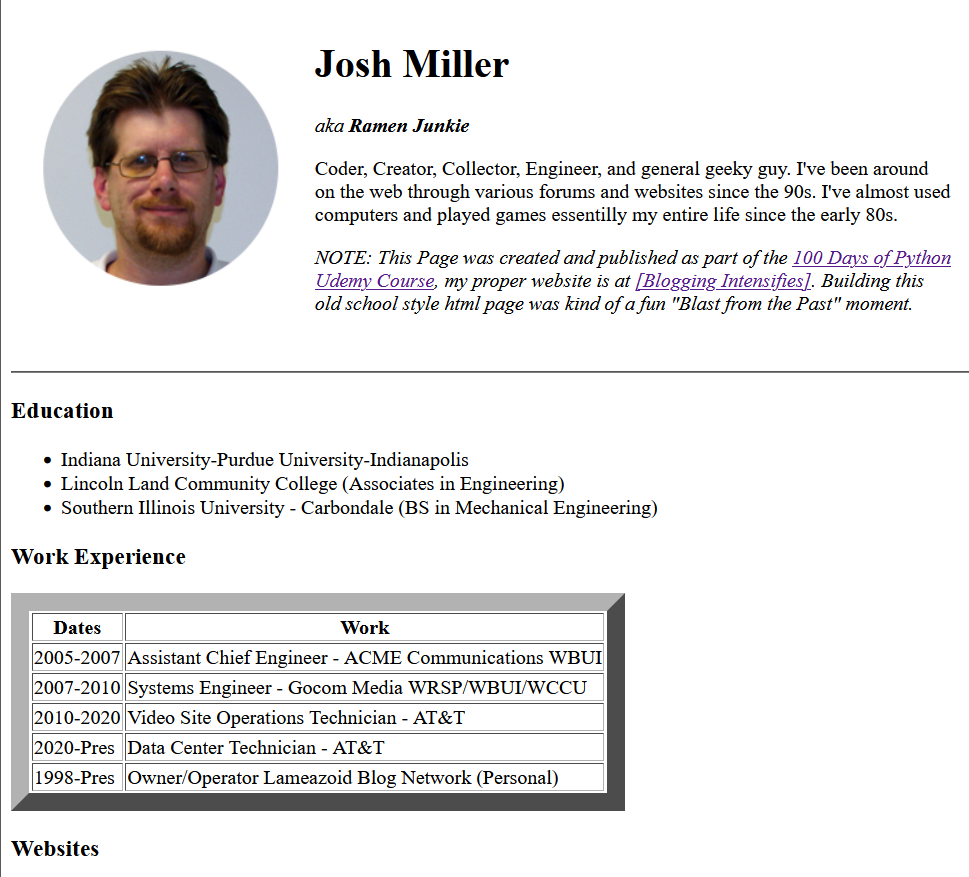

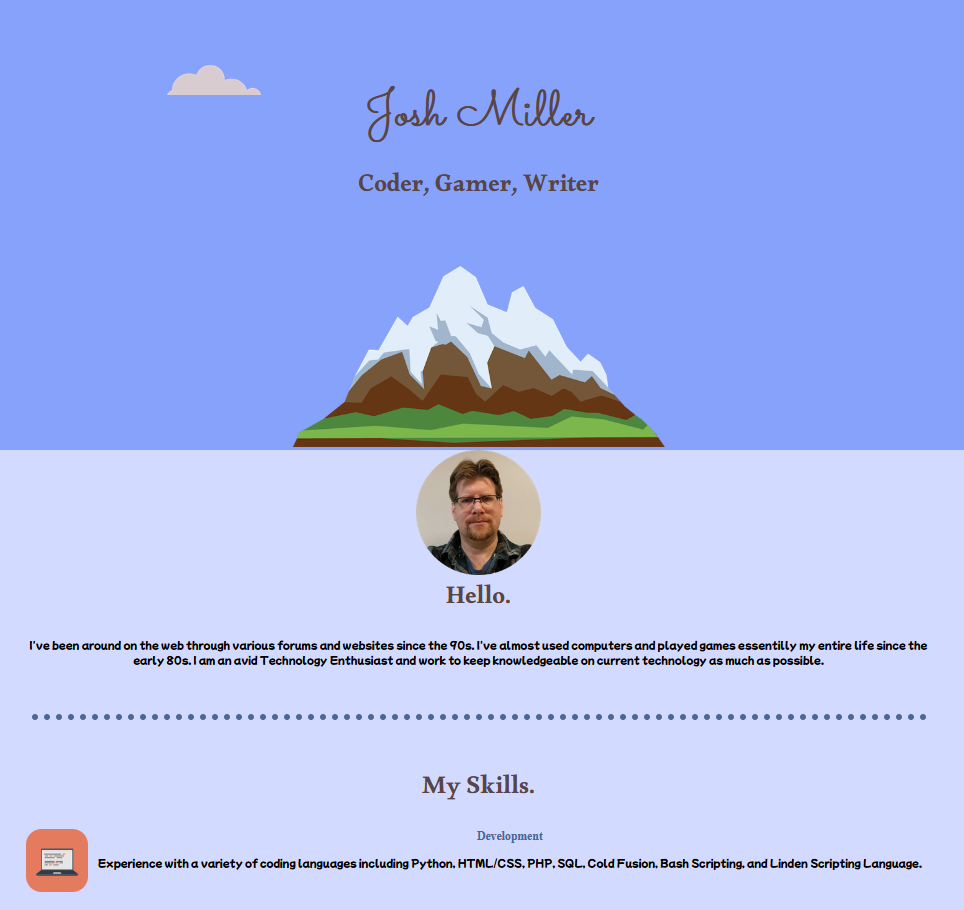

Josh Miller aka “Ramen Junkie”. I write about my various hobbies here. Mostly coding, photography, and music. Sometimes I just write about life in general. I also post sometimes about toy collecting and video games at Lameazoid.com.