Wrap-Up: Let's Try to Be Positive Edition

It’s been a hot minute since I really posted. To be blunt, I am pretty much just endlessly frustrated lately over the state of everything. Particularly the state of politics here in the US. I mean, I knew it would be shitty. I didn’t think it was going to be THIS relentlessly shitty, this quickly.

Let's take a peek inside the background at some journal topics here…

Or not. Let's try to, I dunno, be positive I guess. What's been going on lately that is not awful?

Learning

Like last year, I started doing some leader-led training courses after work a bit. It's been less rigorous than the ones last year, and mostly consisted of doing a Udemy course. This year’s topic so far has been AWS, or Amazon Web Services, which probably powers like 75% of the internet or something. I am actually not sure why it was being offered, because I am not sure we even use AWS at work. I think we mostly use Azure, but then, I am not part of that side of the business, so I actually have no clue what we use.

The Azure courses were full though.

As for AWS. The training specifically was to prep for the AWS Certified Cloud Practitioner certification. I probably should go take it. I am pretty confident I would pass it easily. The only part of the entire course I stumbled on was the weird, added later, 6-point diagram thingy. I can’t even remember the name of it. Like a lot of training of this nature, the whole course felt a bit like a sales pitch for AWS. But this particular section, which the class says was added later to the exam, felt very very very much like sales pitch nonsense.

Sales jargon and bullshit terms are my kryptonite. I can listen to and absorb some technical this and that about EC2 and S3 buckets all day long. But this 6 points of whatever, fuck, I just instantly glaze over. Don’t feed me “feel good” buzzword crap, please. Ever.

I also started doing a bit of Cybersecurity stuff (again) through some work access to something called Immersive Labs. I have no idea if it's any good. So far I have just been doing basics, because it was required for work, but I seem to have full access, and it feels like something I should dig into more.

Back on the cert issue. I should go take the exam. I never did take the exams for CCNA or Pentesting for the courses I took last year. I don’t personally give a shit about certs. It shows you paid money to take a test somewhere. Feels very much in the vein of “feel-good jargon”.

Whatever the case, I have kind of wanted to better understand AWS in general for a while, and the course overall did a great job of it. Most of its tools are well beyond anything I would probably ever need or use, but there were a few that were interesting.

First is the basic EC2. These are essentially their “on-demand servers.” These are not nearly as mysterious as I thought, and once you spin one up, you can log in and do all the normal Linuxey backend stuff one would expect. My thought was, of course, I could migrate my current web stack to AWS from Digital Ocean. I’m not sure I would really save anything for all that effort though. I do not have the need for some crazy highly flexible scalable environment. I’m not concerned about performance really. I’m just running a few WordPress instances against a basic database. At best, I would probably break even on my current spend for a bunch of migration work while supporting Amazon more, which I am already leaning more and more against.

Second was S3 Buckets. This is data storage in the cloud. I’ve considered using it for my backups a few times. It seems useful for some complex cloud app or system. Even with Glacier storage, which is not on-demand retrieval and is the cheapest, I would not end up spending less than my current spend there. Right now I have a Microsoft Office 365 Family Plan and a bunch of segmented syncs off my NAS. That gives me 6 TB for around $70/year, AND Office for everyone in my family. That’s a great deal. Rough estimates in the calculator on Amazon, with only my current usage, put me at breaking even with Glacier.
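For anyone who wants to run the same kind of comparison, here is a rough back-of-the-napkin sketch in Python. It only uses the two numbers from above ($70/year, 6 TB). The function names are made up for illustration, the 1000 GB per TB conversion is an assumption, and any cloud price you plug in should come from the provider's own calculator, not from me.

```python
# Rough storage cost comparison. Function names are hypothetical helpers,
# and GB_PER_TB = 1000 is an assumption (some providers count 1024).
GB_PER_TB = 1000


def implied_price_per_gb_month(yearly_cost, capacity_tb):
    """What a flat yearly plan works out to per GB per month, if fully used."""
    return yearly_cost / 12 / (capacity_tb * GB_PER_TB)


def yearly_storage_cost(used_tb, price_per_gb_month):
    """Yearly cost of pay-per-GB storage for the amount actually stored."""
    return used_tb * GB_PER_TB * price_per_gb_month * 12


if __name__ == "__main__":
    # The family plan from the post: about $70/year for 6 TB.
    plan_price = implied_price_per_gb_month(70, 6)
    print(f"Plan works out to ${plan_price:.6f} per GB per month")
```

To compare, feed `yearly_storage_cost` your real usage and whatever per-GB rate the pricing calculator shows. Note this ignores retrieval and request fees, which a pay-per-use service may also charge.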

Lastly, and the one I am most likely to use, is AWS Lambda. This one is really interesting, and it seems to just be a way to run scripts in the cloud. I’m not sure I would use it a lot, but I could definitely see writing some simple monitoring/notification scripts and sticking them in there running once an hour or so. You get a ton of free runs per month too, which means it probably would cost me nothing in the end.
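As a minimal sketch of what one of those monitoring scripts might look like, here is a Python Lambda handler that checks whether a few sites respond. The `SITES` list and the `check_site` helper are hypothetical names for illustration, and the example URL is a placeholder. It only prints failures (which should land in the function's logs); an actual notification step, like an email or a chat message, is left out.

```python
import urllib.request

# Hypothetical list of sites to watch; swap in real URLs.
SITES = ["https://example.com"]


def check_site(url, opener=urllib.request.urlopen, timeout=10):
    """Fetch one URL and report whether it answered with a non-error status.

    `opener` is injectable so the check can be tested without a network.
    """
    try:
        with opener(url, timeout=timeout) as response:
            status = response.status
    except Exception as exc:  # timeouts, DNS failures, HTTP 4xx/5xx, etc.
        return {"url": url, "ok": False, "detail": str(exc)}
    return {"url": url, "ok": 200 <= status < 400, "detail": f"HTTP {status}"}


def lambda_handler(event, context):
    """Entry point Lambda calls; checks every site and logs the failures."""
    results = [check_site(url) for url in SITES]
    failures = [r for r in results if not r["ok"]]
    for failure in failures:
        print(f"DOWN: {failure['url']} ({failure['detail']})")
    return {"checked": len(results), "failed": len(failures)}
```

For the "once an hour" part, one option is a scheduled rule in Amazon EventBridge with a `rate(1 hour)` expression pointed at the function. I have not set this up myself, so treat it as a starting point.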

The Fediverse

Something that has stemmed from all the crazy stupid nonsense in the world is a push to use federated social media more. I set up a Pixelfed account, which so far is mostly just reposted toy photos from IG. I have been poking around some Lemmy instances a bit. I am trying to use my Mastodon account a bit more. And Bluesky, though that is less independent and federated.

Being more active in the Fediverse does not have to preclude using mainstream social media. I do kind of plan to try to just, I dunno, focus less on just endless angry news. Not to say I am going to stop paying attention, just, more, add more attention elsewhere, also. Balance the awful with the good, or something.

I honestly just hate what seems to be happening with Social Media. I hate that the mindset is that “Social media was a mistake” and “Facebook etc. are evil.” They really are, but also, it was not always this way. Social Media is good, and can be good, but they (social media companies) get too much out of making everyone fucking mad and miserable. I want to go back to giving a shit about family and friends on Facebook. I want to follow local news without brain-dead idiots filling the comments.

Oops, I am ranting a bit. Let’s be positive.

Language

I don’t have a ton to say about my language learning, but I did want to mention it. I feel like I have crossed some sort of threshold. I am not amazing at it, but I find lately I have a much much easier time understanding Spanish, and in a fairly passive way. I also was inspired a bit by a Reddit post, and have gotten way way more aggressive with lessons. There is a lot of repetition at the point I am at.

A lot.

Muchos

I have been getting a little too bored with it. My current strategy is to finish the first bubble in a section, then do the first story, then skip ahead to the exam. I don’t need 5-6 more bubbles to prepare for that exam. I got it. This has actually been working pretty well. Especially because the repetition isn’t limited to within a single lesson section, it extends beyond to future lessons.

I am not doing it as aggressively as the Reddit post person did. They did one of these per day. I am doing one of these every 2-3 days. I have done some other languages, but I have been working on this course for too long.

I also feel like I am retaining it better, because I am less bored.

Other

I have not been up to much else. Like I said, the mess of everything just kind of saps my give a shit, which is frustrating. When I have not been doing class, I have been playing Infinity Nikki, or Fortnite, and we have been rewatching all the Marvel movies. Most of that is more Lameazoid topics.

I have avoided it (somehow) but everyone in my house has been sick for the past few weeks as well. Kind of sucks because it means the shop is closed. This is a slow time of year for that anyway. At least one of the other local businesses in the area there said they usually close for January. Also, the bulk of the sales for the shop are on eBay. The bulk of the space is for managing eBay items, the shop portion is just a nice “bonus”.

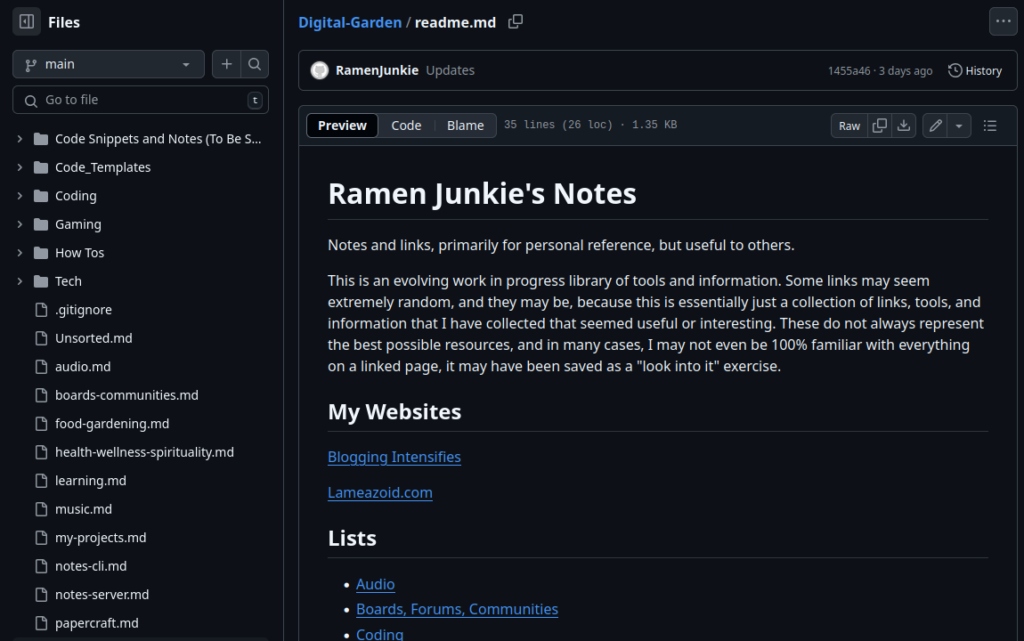

I’ve also been fitting in a lot of serious hardcore Bookmarks sorting. I kind of touched on this recently I think, I mentioned setting up LinkAce, which I have already dropped, for now. I’m just creating my big link list Digital Garden now instead. It works fine, it’s easily searchable. Another nice benefit is I am being reminded of a lot of things I bookmarked to “look into later”. I have a nice sorted pile of “to-do projects” that I had completely forgotten about now. I can ignore them in a whole new way this way.