Monday 2024-11-04 – Link List

Blogging Intensifies Link List for Monday 2024-11-04

04-Nov-2024 – How Fox Intentionally Destroyed Mike Judge’s Idiocracy, And Why its A Travesty

Brief Summary: “From King of the Hill to Office Space, Mike Judge is an expert at creating iconic comedies that find”

04-Nov-2024 – A Lesson in RF Design Thanks to This Homebrew LNA

Brief Summary: “If you’re planning on working satellites or doing any sort of RF work where the signal lives down in”

04-Nov-2024 – What’s the most popular way to prepare Japan’s beloved breakfast dish, Tamago Kake Gohan?

Brief Summary: ”

Survey results shed light on people’s personal preferences for mixing the raw egg into the rice as “

04-Nov-2024 – Second Life Grid Statistics – November 2024

Brief Summary: “Here are the latest Second Life grid statistics as of Sunday 3rd November 2024. These stats are avai”

04-Nov-2024 – Ava Max has released her festive new single ‘1 Wish’

Brief Summary: ”

Ava Max has shared a new holiday single titled ‘1 Wish’.

The track follows her recent collaborat”

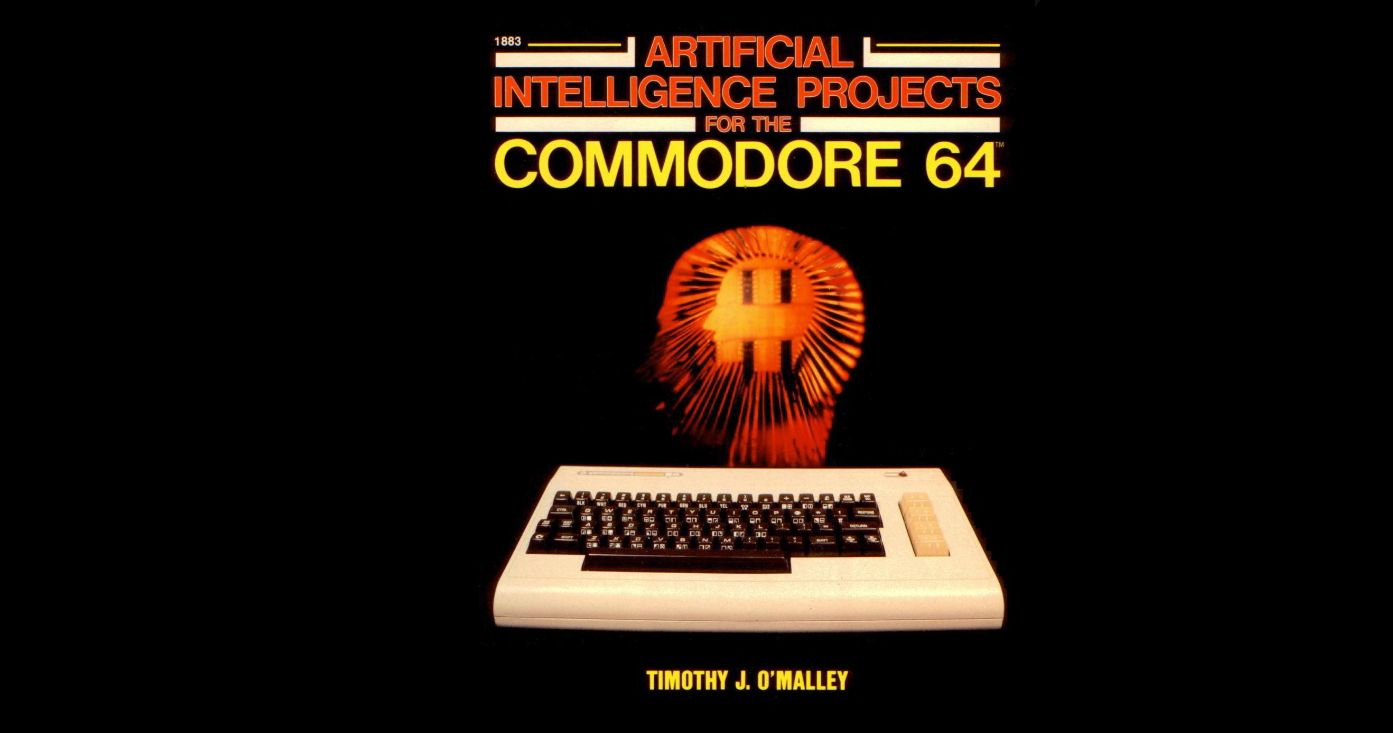

03-Nov-2024 – All You Need for Artificial Intelligence is a Commodore 64

Brief Summary: “Artificial intelligence has always been around us, with [Timothy J. O’Malley]’s 1985 book on AI proj”

03-Nov-2024 – Boobs Curry going on sale in Japan just in time for Nice Boobs Day

Brief Summary: ”

“A product that allows you to enjoy dreams, romance, and playfulness.”

Curry rice is one of Japan’”

Josh Miller aka “Ramen Junkie”. I write about my various hobbies here. Mostly coding, photography, and music. Sometimes I just write about life in general. I also post sometimes about toy collecting and video games at Lameazoid.com.